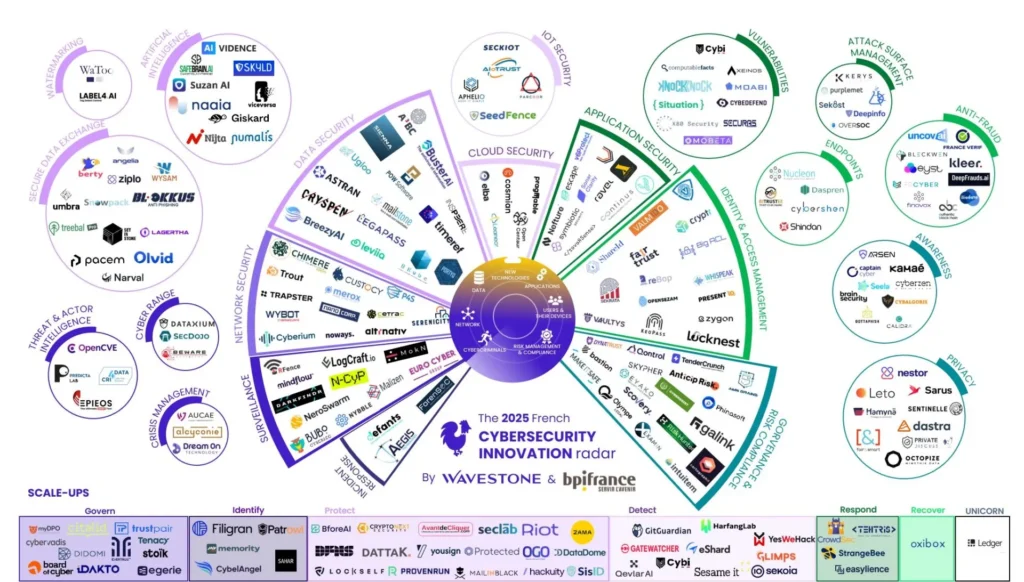

Naaia featured in Wavestone’s 2025 Radar of French Cybersecurity Startups

Renewed distinction. We are delighted to announce that Naaia has been included, for the second consecutive year, in the Radar of French Cybersecurity Startups published by Wavestone in partnership with Bpifrance. This recognition, in the Artificial Intelligence category, highlights the relevance of our vision and the strength of our solution within a thriving and innovative […]

Naaia joins La French Tech Grand Paris to promote responsible AI 🚀

We are happy to announce an important new step for Naaia: our membership in La French Tech Grand Paris. This lets us join a dynamic ecosystem that brings together the startups, investors, partners and institutional players who make French innovation shine nationally and internationally. A strengthened commitment to trustworthy AI. At […]

Embedded AI and medical devices: steering a regulatory and strategic transformation

The integration of artificial intelligence into medical devices upends the traditional logic of regulation. Caught between the requirements of the medical device framework, the constraints of the AI Act, the governance of algorithmic risk and data management obligations (GDPR, Data Act), companies must rethink their compliance strategy in the face of a complex European framework combining constraints that are at […]

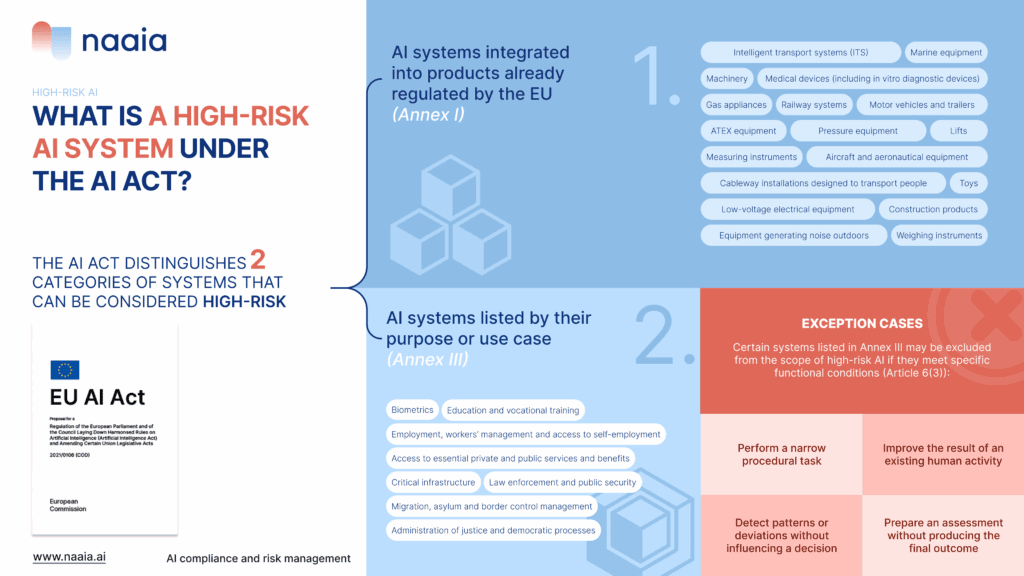

High-risk AI: Understanding the AI Act list to anticipate your obligations

The European regulation on artificial intelligence, the AI Act, introduces a risk-based approach to regulating the use of AI. It imposes specific requirements on high-risk AI systems, meaning those likely to have a significant impact on fundamental rights, health or safety. A public consultation is currently open to clarify the classification of the systems concerned, possible exceptions, and […]