The European regulation on artificial intelligence, the AI Act, introduces a risk-based approach to regulate the use of AI. It imposes specific requirements on high-risk AI systems, meaning those likely to have a significant impact on fundamental rights, health, or safety. A public consultation is currently open to clarify the classification of the systems concerned, possible exceptions, and the obligations related to their placement on the market.

The AI Act List: Two Paths to Classify High-Risk AI

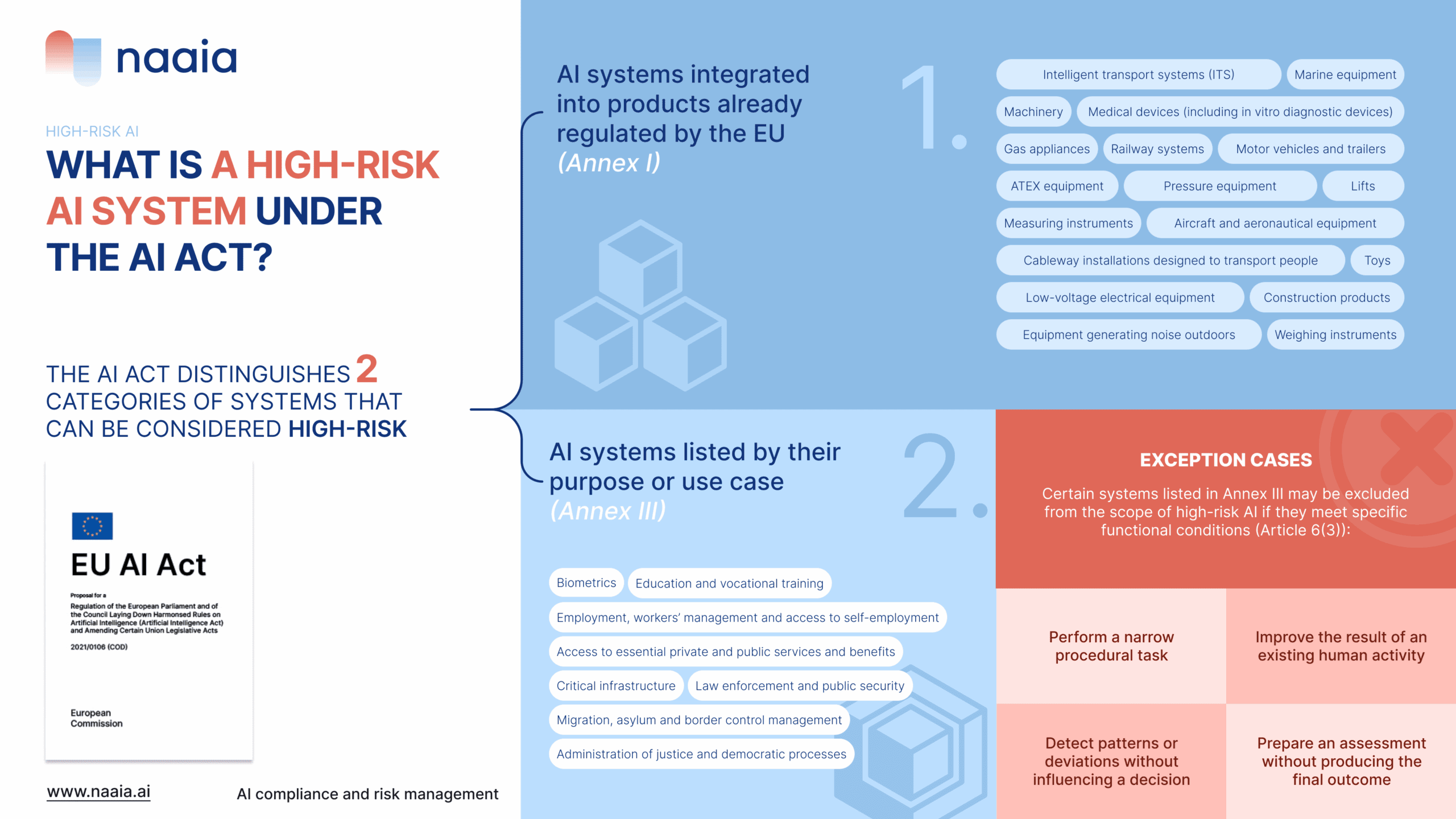

The AI Act distinguishes two categories of systems that can be considered high-risk:

- AI systems integrated into products already regulated by the EU (Annex I)

- AI systems listed based on their purpose or use case (Annex III)

In both cases, these systems are subject to the requirements of Chapter III of the regulation, particularly in terms of risk management, transparency, and human oversight.

1. Regulated AI systems: products covered by other European laws

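Some products are already subject to a conformity assessment under sector-specific legislation. When an AI system is used as a safety component of one of these products, or is itself such a product, and the product must undergo a third-party conformity assessment, the AI system is considered high-risk.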

Product categories cited in this context include the following (Annex I of the regulation remains the authoritative list):

- Medical devices (including in vitro)

- Machinery

- Motor vehicles and trailers

- Railway systems

- Aircraft, aeronautical equipment

- Marine equipment

- Gas appliances

- Pressure equipment

- ATEX equipment

- Toys

- Low-voltage electrical equipment

- Weighing instruments

- Measuring instruments

- Lifts

- Construction products

- Personal protective equipment

- Cableway installations for passenger transport

- Outdoor noise-emitting equipment

- Intelligent Transport Systems (ITS)

These products are considered sensitive because their proper functioning has a direct impact on public safety or user rights.
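As a rough illustration, the first path can be sketched in Python. The sketch assumes the conditions of Article 6(1): the AI system is a safety component of a product covered by Annex I legislation (or is itself that product), and the product requires a third-party conformity assessment. `ProductContext` and its field names are hypothetical and chosen for this example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductContext:
    """Hypothetical description of how an AI system relates to a regulated product."""
    covered_by_annex_i: bool              # product falls under legislation listed in Annex I
    is_safety_component_or_product: bool  # AI is a safety component, or is the product itself
    needs_third_party_assessment: bool    # product requires a third-party conformity assessment


def is_high_risk_via_annex_i(ctx: ProductContext) -> bool:
    """Return True only when all three assumed conditions hold."""
    return (
        ctx.covered_by_annex_i
        and ctx.is_safety_component_or_product
        and ctx.needs_third_party_assessment
    )


# Illustrative case: an AI safety component in a lift that needs third-party assessment.
lift_controller = ProductContext(
    covered_by_annex_i=True,
    is_safety_component_or_product=True,
    needs_third_party_assessment=True,
)
# Illustrative case: an AI feature in a product outside Annex I.
chat_widget = ProductContext(
    covered_by_annex_i=False,
    is_safety_component_or_product=False,
    needs_third_party_assessment=False,
)

print(is_high_risk_via_annex_i(lift_controller))  # True
print(is_high_risk_via_annex_i(chat_widget))      # False
```

A real assessment depends on the sector-specific legislation that applies to the product; the three booleans only capture the outcome of that analysis.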

2. Full list of high-risk AI use cases

Annex III of the AI Act identifies eight categories of use cases in which AI systems are presumed high-risk because of their potential impact on people’s rights, health, or safety. These areas cover sensitive functions in social, economic, or democratic life. Below is the list, category by category:

Category 1: Biometrics

- Remote biometric identification in real time or post-event in publicly accessible spaces

- Biometric categorization based on sensitive attributes (e.g., ethnic origin or sexual orientation)

- Emotion recognition from images or physiological signals

Category 2: Critical infrastructure

- AI systems used as safety components for managing the supply of water, electricity, gas, or heating

- Systems linked to road traffic management or critical digital infrastructure

Category 3: Education and vocational training

- Access or admission to educational institutions

- Learning outcome assessment, student guidance, personalization of learning paths

- Exam monitoring (detection of suspicious behavior)

Category 4: Employment, human resources, and access to self-employment

- Automated recruitment: job posting, pre-selection, candidate scoring

- HR decision-making: promotion, dismissal, task assignment

- Monitoring employee performance and behavior

Category 5: Access to essential services (public and private)

- Granting or withdrawing social benefits, healthcare, or public aid

- Credit scoring and creditworthiness assessment (excluding fraud detection)

- Risk assessment and pricing in life and health insurance

- Processing and prioritization of emergency calls

Category 6: Law enforcement and public security

- Risk assessment for victimization or reoffending

- Use of systems like polygraphs or lie detectors

- Evaluation of the reliability of evidence in investigations

- Predictive profiling (excluding fully automated profiling)

Category 7: Migration, asylum, and border control

- Security or immigration risk assessment

- Assistance in examining asylum, visa, or residence permit applications

- Automated person identification (excluding simple document checks)

Category 8: Justice and democratic processes

- Decision support for judges or alternative dispute resolution

- Systems aimed at directly influencing voting or electoral behavior

In all these cases, systems must comply with the strict obligations of Chapter III of the regulation, unless they meet the exemption conditions defined in Article 6(3).

3. Exemption cases: When AI Systems Are Not High-Risk

Some systems listed in Annex III may fall outside the scope of high-risk AI systems if they meet specific functional conditions. The goal is to avoid overregulating low-impact technical uses. To qualify for this exemption, an AI system must fit at least one of the following cases:

- Perform a narrow procedural task: A system that carries out a well-defined and low-stakes task, such as converting unstructured data into structured data, sorting incoming documents, or detecting duplicates.

- Enhance the output of a pre-existing human activity: The AI does not decide autonomously but refines the result of a completed human action, for example by rewording a text in a professional tone or adapting it to an editorial style guide.

- Detect patterns or anomalies without influencing decisions: The AI intervenes after the fact to flag possible anomalies, without replacing or directly influencing human judgment, e.g., flagging inconsistencies in a teacher’s grading.

- Prepare an assessment without producing the final result: Applies to AI systems that support upstream processing (indexing, translation, text or speech recognition) without taking part in the final decision.

Providers relying on an exemption must nevertheless carry out and document a rigorous assessment showing that the system has no decision-making autonomy and no direct impact on individuals’ rights.
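The second path and its exemptions can be sketched as a small decision function. This is a simplified illustration, not a legal test: the enum members paraphrase the four cases above, and `UseCase`, `classify`, and the returned labels are hypothetical names. The sketch also assumes, following Article 6(3), that a system performing profiling of natural persons stays high-risk regardless of the exemptions, a rule not covered in the list above.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Exemption(Enum):
    """The four functional cases listed above (names are illustrative)."""
    NARROW_PROCEDURAL_TASK = auto()
    ENHANCES_PRIOR_HUMAN_ACTIVITY = auto()
    DETECTS_PATTERNS_WITHOUT_INFLUENCE = auto()
    PREPARATORY_TASK = auto()


@dataclass(frozen=True)
class UseCase:
    name: str
    listed_in_annex_iii: bool
    exemptions_met: frozenset = frozenset()  # set of Exemption members that apply
    performs_profiling: bool = False         # assumption: profiling rules out any exemption


def classify(use_case: UseCase) -> str:
    """Return a coarse label for the Annex III path only."""
    if not use_case.listed_in_annex_iii:
        return "not high-risk under Annex III"
    if use_case.performs_profiling:
        return "high-risk"
    if use_case.exemptions_met:
        return "exempt (assessment must be documented)"
    return "high-risk"


cv_ranking = UseCase("candidate scoring", listed_in_annex_iii=True)
deduplication = UseCase(
    "duplicate application detection",
    listed_in_annex_iii=True,
    exemptions_met=frozenset({Exemption.NARROW_PROCEDURAL_TASK}),
)

print(classify(cv_ranking))     # high-risk
print(classify(deduplication))  # exempt (assessment must be documented)
```

Meeting one case is enough in this sketch, which mirrors the "one of the following cases" wording; whether a given system truly fits a case remains a functional analysis that code cannot replace.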

AI Act Compliance: What Providers of High-Risk AI Must Do

Providers of high-risk AI systems must comply with several key obligations to ensure alignment with the AI Act:

- Risk management throughout the system’s lifecycle (Article 9): implementation of a continuous, planned, and iterative process, addressing known or foreseeable risks.

- Comprehensive technical documentation

- Transparency toward users

- Appropriate human oversight

- A quality management system

The goal is to ensure that the system operates as intended, within a controlled framework, with safeguards for affected individuals.
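For teams tracking these obligations internally, a minimal checklist helper might look like the following. The obligation labels are shorthand for the five items above, and `missing_obligations` is a hypothetical function name; nothing here is prescribed by the regulation.

```python
# Shorthand labels for the five provider obligations listed above.
OBLIGATIONS = (
    "risk management",
    "technical documentation",
    "transparency toward users",
    "human oversight",
    "quality management system",
)


def missing_obligations(completed: set[str]) -> list[str]:
    """Return the obligations not yet marked as completed, in list order."""
    unknown = completed - set(OBLIGATIONS)
    if unknown:
        # Guard against typos that would silently hide a gap.
        raise ValueError(f"unknown obligation labels: {sorted(unknown)}")
    return [item for item in OBLIGATIONS if item not in completed]


done = {"risk management", "human oversight"}
print(missing_obligations(done))
# ['technical documentation', 'transparency toward users', 'quality management system']
```

A flat checklist like this only records status; each item still needs its own evidence, such as the iterative risk management process described for Article 9.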

Understanding the categories and obligations of high-risk systems is essential to anticipate regulatory compliance. The AI Act provides a clear framework but calls for rigorous functional analysis of each use case.


Do you design or use a potentially high-risk AI?

Make sure you comply with the AI Act: request a Naaia demo.